One of the great benefits offered by the social web is the open way in which people can connect, not only via the obvious means of social networks but also through engaging in discourse and conversation in the commenting sections of websites, blogs, etc.

Open conversation and the sharing of different points of view are foundational activities for many online publications, from mainstream media sites to individual blogs.

To many, enabling readers, visitors and communities to comment on articles, reports, posts and so on is the whole point of being online: to engage in conversation; to surface different points of view and opinions; and to foster connections between people that enable greater understanding of others’ perspectives and advance the conversation.

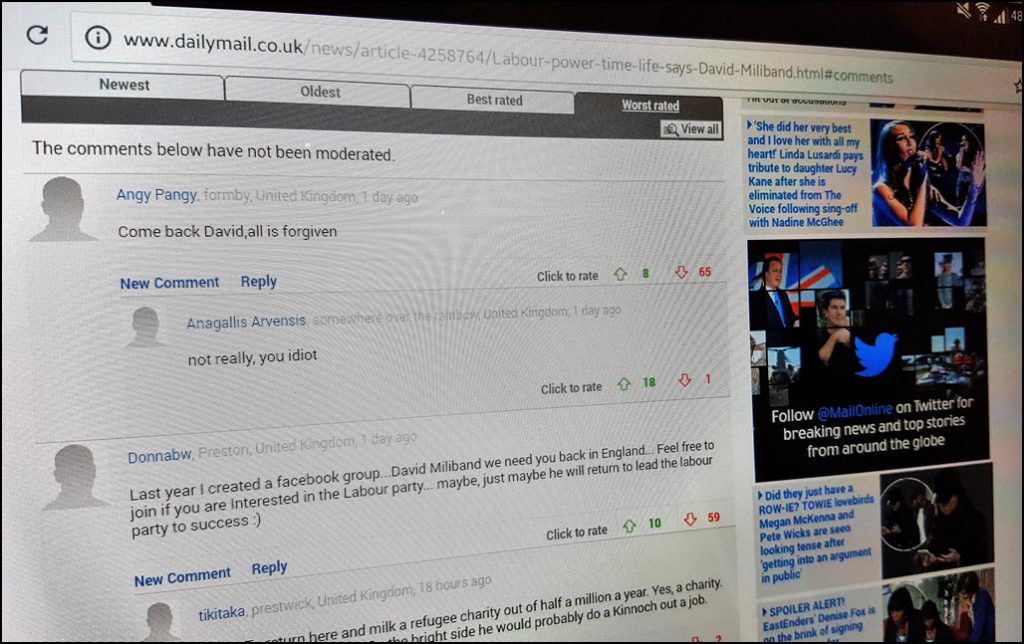

Over the past few years there’s been a movement towards more control over commenting, especially by the mainstream media, in response to what many would see as an abuse of privilege by some people through their anti-social behaviour: trolling, cyberbullying, and spam, to name but three examples.

That movement ranges from outsourcing the management of commenting to third-party commenting services, to eliminating commenting entirely in some cases.

It’s a double-edged sword, though. Outsource your commenting and you can weaken your domain presence; remove commenting entirely and you also eliminate that foundational element of conversation, especially if you also remove the ability to connect comments made elsewhere (on social networks such as Twitter and Facebook, for instance) to your content.

Weeding out the comments and commenters that a site owner or publisher regards as undesirable is a constant task. When people have to do it by hand, especially at the scale of a large publication, it’s not hard to see why the barriers to addressing the problem are huge.

It seems to me that the barriers are insurmountable unless there is a way to automate the process of deciding what’s undesirable and what isn’t: what gets deleted and what gets posted. We need something that augments our own ability to make those yes/no decisions, and that makes the process all-embracing: adding emotion to the logic.

If ever there was a repetitive task crying out for an algorithm, this is it!

Augmenting Intelligence

Google has a potential solution in Perspective, an artificial intelligence tool developed by Jigsaw (a technology incubator within Google’s parent company, Alphabet) that identifies abusive comments online and could remove the barriers I mentioned earlier.

The Financial Times reports that Perspective is being tested by a range of news organisations, including The New York Times, The Guardian and The Economist, as a way to help simplify the jobs of humans reviewing comments on their stories.

Other organisations in the testing programme include Wikipedia, the BBC and the FT itself.

How it works is quite straightforward, says the FT:

Perspective helps to filter abusive comments more quickly for human review. The algorithm was trained on hundreds of thousands of user comments that had been labelled as “toxic” by human reviewers, on sites such as Wikipedia and the New York Times. It works by scoring online comments based on how similar they are to comments tagged as “toxic” or likely to make someone leave a conversation.
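To make the idea of “scoring a comment by how similar it is to comments tagged as toxic” concrete, here is a toy sketch. It is not how Perspective works internally (Perspective uses machine learning models trained on labelled comments); it swaps in a simple bag-of-words cosine similarity against a few hypothetical labelled examples, purely to illustrate similarity-based scoring.

```python
import math
import re
from collections import Counter

# Hypothetical comments labelled "toxic" by human reviewers (illustration only).
TOXIC_EXAMPLES = [
    "you are an idiot",
    "shut up you stupid troll",
    "nobody wants your worthless opinion",
]

def tokenize(text: str) -> Counter:
    """Lower-case the text and count word occurrences (a bag of words)."""
    return Counter(re.findall(r"[a-z']+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors, between 0 and 1."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0

def toy_toxicity_score(comment: str, examples=TOXIC_EXAMPLES) -> float:
    """Score a comment by its highest similarity to any labelled toxic example."""
    vec = tokenize(comment)
    return max((cosine(vec, tokenize(ex)) for ex in examples), default=0.0)

print(round(toy_toxicity_score("you are an idiot"), 2))                 # → 1.0
print(round(toy_toxicity_score("thanks for a thoughtful article"), 2))  # → 0.0
```

A real system learns far subtler signals than shared words, which is exactly why a trained model can beat the keyword blacklists mentioned below.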

In essence, Perspective analyses lots of data at scale, accurately and very quickly (relative to humans doing it), then presents the results of its analysis to comment moderators to enable them to confidently and quickly approve or deny a comment.
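The hand-off to human moderators might look something like the following sketch. It assumes each comment already carries a toxicity score between 0 and 1, and the 0.7 flag threshold and the `ScoredComment` type are hypothetical choices for illustration: the queue is simply ordered so that the likeliest problems reach a moderator first, and the moderator still makes the approve or deny decision.

```python
from dataclasses import dataclass

@dataclass
class ScoredComment:
    comment_id: str
    text: str
    toxicity: float  # assumed 0.0-1.0 score from a classifier such as Perspective

def moderation_queue(comments, flag_threshold=0.7):
    """Return (comment, flagged) pairs, highest toxicity score first.

    Nothing is deleted here: flagging only marks comments that a human
    moderator should look at first.
    """
    ranked = sorted(comments, key=lambda c: c.toxicity, reverse=True)
    return [(c, c.toxicity >= flag_threshold) for c in ranked]

comments = [
    ScoredComment("c1", "Great piece, thanks!", 0.03),
    ScoredComment("c2", "You clearly know nothing about this.", 0.62),
    ScoredComment("c3", "(abusive comment)", 0.95),
]
for comment, flagged in moderation_queue(comments):
    print(comment.comment_id, comment.toxicity, "FLAGGED" if flagged else "ok")
```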

Part of [Google’s] broader Conversation AI initiative, Perspective uses machine learning to automatically detect insults, harassment, and abusive speech online. Enter a sentence into its interface, and Jigsaw says its AI can immediately spit out an assessment of the phrase’s “toxicity” more accurately than any keyword blacklist, and faster than any human moderator.

Google says Perspective makes it easier to host better conversations:

The API uses machine learning models to score the perceived impact a comment might have on a conversation. Developers and publishers can use this score to give realtime feedback to commenters or help moderators do their job, or allow readers to more easily find relevant information.
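As a rough sketch of what a developer’s call to the API might look like, the code below builds a request asking for a `TOXICITY` score and reads the summary score from the response. The endpoint and field names follow the public Perspective API documentation as I understand it (it is an alpha API, so check the current docs); the `PERSPECTIVE_API_KEY` environment variable and the helper function names are hypothetical.

```python
import json
import os
import urllib.request

# v1alpha1 analyze endpoint; verify against the current Perspective API docs.
ANALYZE_URL = "https://commentanalyzer.googleapis.com/v1alpha1/comments:analyze"

def build_request_body(text: str, language: str = "en") -> dict:
    """Request body asking for a TOXICITY score for a single comment."""
    return {
        "comment": {"text": text},
        "languages": [language],
        "requestedAttributes": {"TOXICITY": {}},
    }

def toxicity_from_response(response: dict) -> float:
    """Extract the overall (summary) TOXICITY score from an analyze response."""
    return response["attributeScores"]["TOXICITY"]["summaryScore"]["value"]

def score_comment(text: str, api_key: str) -> float:
    """POST one comment to the API and return its toxicity score (network call)."""
    req = urllib.request.Request(
        f"{ANALYZE_URL}?key={api_key}",
        data=json.dumps(build_request_body(text)).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return toxicity_from_response(json.load(resp))

if __name__ == "__main__":
    key = os.environ.get("PERSPECTIVE_API_KEY")  # hypothetical variable name
    if key:
        print(score_comment("What a thoughtful article.", key))
```

A publisher could then compare the returned score against a threshold of its own choosing, either to nudge a commenter in real time before posting or to route the comment to a moderator.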

Google made Perspective openly available for anyone to use just last week, and says it’s early days in its development (“We will get a lot of things wrong,” the company adds).

What might come as Perspective develops further, I wonder? The ability to become the moderator itself? That seems to me a logical step in its evolution.

In any case, this is a great pointer to how artificial intelligence, in the form of an API backed by machine learning, can measurably help people perform a task more quickly and accurately than before, learning as it goes.

Indeed, a great example of augmented intelligence.